
Concepts

- 1: Development Model

- 1.1: Vehicle App SDK

- 1.2: Vehicle Abstraction Layer (VAL)

- 1.2.1: GRPC Interface Style Guide

- 1.3: Vehicle App Manifest

- 1.3.1: Interfaces

- 1.3.1.1: Vehicle Signal Interface

- 1.3.1.2: gRPC Service Interface

- 1.3.1.3: Publish Subscribe

- 2: Deployment Model

- 3: Lifecycle Management

- 3.1: Project Configuration

- 3.2: Velocitas CLI

- 3.3: Phases

- 3.3.1: Create

- 3.4: Packages

- 3.4.1: Usage

- 3.4.2: Development

- 3.5: Troubleshooting

- 4: Logging guidelines

1 - Development Model

The Velocitas development model is centered around what are known as Vehicle Apps. Automation allows engineers to make high-impact changes frequently and deploy Vehicle Apps through cloud backends as over-the-air updates. The Vehicle App development model is about speed and agility paired with state-of-the-art software quality.

Development Architecture

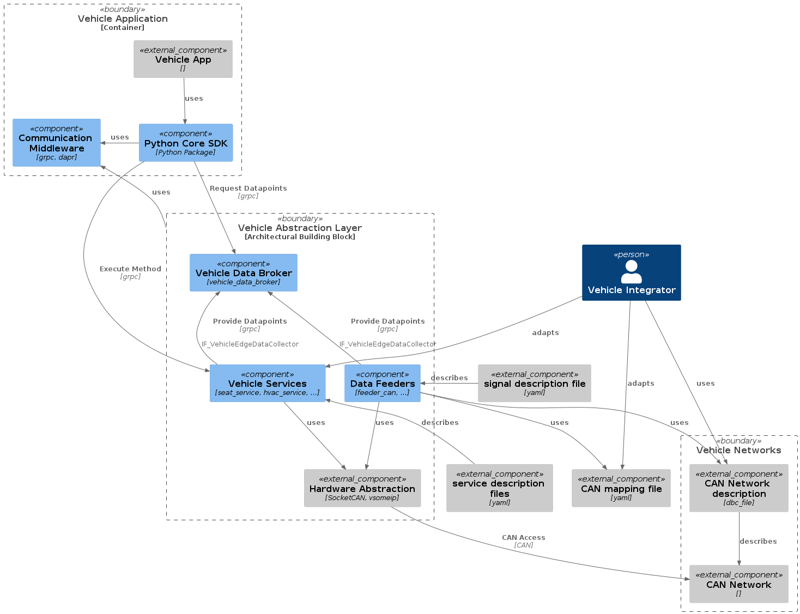

Velocitas provides a flexible development architecture for Vehicle Apps . The following diagram shows the major components of the Velocitas stack.

Vehicle Apps

The Vehicle Applications (Vehicle Apps) contain the business logic that needs to be executed on a vehicle. A Vehicle App is implemented on top of a Vehicle Model and its underlying language-specific SDK. Many concepts of cloud-native and twelve-factor applications apply to Vehicle Apps as well and are summarized in the next chapter.

Vehicle Models

A Vehicle Model makes it possible to easily get vehicle data from the Databroker and to execute remote procedure calls over gRPC against Vehicle Services and other Vehicle Apps. It is generated from the underlying semantic models for a concrete programming language as a graph-based, strongly typed, IntelliSense-enabled library. The elements of the Vehicle Models are defined by the SDKs.

SDKs

Our SDKs, available for different programming languages, are the foundation for the vehicle abstraction provided by the Vehicle Model. Furthermore, they offer abstraction from the underlying middleware and communication protocols, and provide the base classes and utilities for the Vehicle Apps. SDKs are currently available for Python and C++. Further SDKs for Rust and C are planned.

Vehicle Services

Vehicle Services provide service interfaces to control actuators or to trigger (complex) actions. For example, they communicate with vehicle-internal networks like CAN or Ethernet, which are connected to actuators, electronic control units (ECUs) and other vehicle computers (VCs). They may provide a simulation mode to run without a network interface. Vehicle Services may feed data to the Databroker and may expose gRPC endpoints, which can be invoked by Vehicle Apps over a Vehicle Model.

KUKSA Databroker

Vehicle data is stored in the KUKSA Databroker conforming to an underlying Semantic Model like VSS. Vehicle Apps can either pull this data or subscribe for updates. In addition, it supports rule-based access to reduce the number of updates sent to the Vehicle App.

Semantic models

The Vehicle Signal Specification (VSS) provides a domain taxonomy for vehicle signals and semantically defines the vehicle data that is exchanged between Vehicle Apps and the Databroker.

The Velocitas SDK uses VSS as the semantic model for the Vehicle Model. Vehicle Service models can be defined with Protobuf service definitions.

Communication Protocols

Asynchronous communication between Vehicle Apps and other vehicle components, as well as cloud connectivity, is facilitated through MQTT messaging. Direct, synchronous communication between Vehicle Apps, Vehicle Services and the Databroker is based on the gRPC protocol.

Middleware Abstraction

Velocitas provides middleware abstraction interfaces for service discovery, pub/sub messaging, and other cross-cutting functionalities. At the moment, Velocitas offers only a so-called “native middleware” implementation, which does not provide (gRPC) service discovery. Instead, the addresses and port numbers of services need to be provided to an app via environment variables, e.g. SDV_VEHICLEDATABROKER_ADDRESS=grpc://localhost:55555. Support for Dapr as middleware has recently been removed.
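To illustrate the environment-variable approach, here is a minimal sketch of how an app could resolve a service address at startup. It is not the SDK's actual implementation: the helper name `resolve_service_address` and the fallback value are assumptions made for this example; only the variable name and the `grpc://host:port` format are taken from the text above.

```python
import os
from typing import Tuple
from urllib.parse import urlsplit


def resolve_service_address(env_var: str, default: str) -> Tuple[str, int]:
    """Return (host, port) parsed from an address such as grpc://localhost:55555.

    Hypothetical helper for illustration only; not part of the Velocitas SDK.
    """
    address = os.environ.get(env_var, default)
    parts = urlsplit(address)
    if parts.hostname is None or parts.port is None:
        raise ValueError(f"{env_var} must look like scheme://host:port, got {address!r}")
    return parts.hostname, parts.port


# The fallback address is an assumption for local development.
host, port = resolve_service_address(
    "SDV_VEHICLEDATABROKER_ADDRESS", "grpc://localhost:55555"
)
```

Keeping the address outside the code means the same container image can be started against a different Databroker simply by changing the environment.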

Vehicle Edge Operating System

Vehicle Apps are expected to run on a Linux-based operating system. An OCI-compliant container runtime is required to host the Vehicle App containers. For publish/subscribe messaging, an MQTT broker must be available (e.g., Eclipse Mosquitto).

Vehicle App Characteristics

The following aspects are important characteristics for Vehicle Apps:

- Code base: Every Vehicle App is stored in its own repository. Tracked by version control, it can be deployed to multiple environments.

- Polyglot: Vehicle Apps can be written in any programming language. System-level programming languages like Rust and C/C++ are particularly relevant for limited hardware resources found in vehicles, but higher-level languages like Python and JavaScript are also considered for special use cases.

- OCI-compliant containers: Vehicle Apps are deployed as OCI-compliant containers. The size of these containers should be minimal to fit on constrained devices.

- Isolation: Each Vehicle App should execute in its own process and should be self-contained with its interfaces and functionality exposed on its own port.

- Configurations: Configuration information is separated from the code base of the Vehicle App, so that the same deployment can propagate across environments with their respective configuration applied.

- Disposability: Favor fast startup and support graceful shutdowns to leave the system in a correct state.

- Observability: Vehicle Apps provide traces, metrics and logs of every part of the application using OpenTelemetry.

- Over-the-air update capability: Vehicle Apps can be deployed via cloud backends like Pantaris and updated in vehicles frequently over the air through NextGen OTA updates.

Development Process

The starting point for developing Vehicle Apps is a Semantic Model of the vehicle data and vehicle services. Based on the Semantic Model, language-specific Vehicle Models are generated. Vehicle Models are then distributed as packages to the respective package manager of the chosen programming language (e.g. pip, cargo, npm, …).

After a Vehicle Model is available for the chosen programming language, the Vehicle App can be developed using the generated Vehicle Model and its SDK.

Further information

1.1 - Vehicle App SDK

Introduction

The Vehicle App SDK consists of the following building blocks:

- Vehicle Model Ontology: The SDK provides a set of model base classes for the creation of vehicle models.

- Middleware integration: Vehicle Models can contain gRPC stubs to communicate with Vehicle Services. gRPC communication is integrated natively.

- Fluent query & rule construction: Based on a concrete Vehicle Model, the SDK is able to generate queries and rules against the KUKSA Databroker to access the real values of the data points that are defined in the vehicle model.

- Publish & subscribe messaging: The SDK supports publishing messages to an MQTT broker and subscribing to topics of an MQTT broker.

- Vehicle App abstraction: Last but not least, the SDK provides a VehicleApp base class, which every Vehicle App derives from.

An overview of the Vehicle App SDK and its dependencies is depicted in the following diagram:

Vehicle Model Ontology

The Vehicle Model is a tree-based model where every branch in the tree, including the root, is derived from the Model base class provided by the SDK.

The Vehicle Model Ontology consists of the following classes:

Model

A model contains data points (leaves) and other models (branches).

ModelCollection

Info

The ModelCollection is deprecated since SDK v0.4.0. The generated vehicle model must reflect the actual representation of the data points. Please use the Model base class instead.

Specifications like VSS support a concept called Instances, which makes it possible to describe repeating definitions. In DTDL, such structures may be modeled with Relationships. In the SDK, these structures are mapped with the ModelCollection class. A ModelCollection is a collection of models, which makes it possible to reference an individual model either by a NamedRange (e.g., Row [1-3]), a Dictionary (e.g., “Left”, “Right”) or a combination of both.

Service

Direct asynchronous communication between Vehicle Apps and Vehicle Services is facilitated via the gRPC protocol.

The SDK has its own Service base class, which provides a convenience API layer to access the exposed methods of exactly one gRPC endpoint of a Vehicle Service or another Vehicle App. Please see the Middleware Integration section for more details.

DataPoint

DataPoint is the base class for all data points. It corresponds to sensors/actuators/attributes in VSS or telemetry/properties in DTDL.

Data points are the signals that are typically emitted by Vehicle Services or Data Providers.

The representation of a data point is a path starting with the root model, e.g.:

    Vehicle.Speed
    Vehicle.FuelLevel
    Vehicle.Cabin.Seat.Row1.Pos1.Position

Data points are defined as attributes of the model classes. The attribute name is the name of the data point without its path.
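The relationship between the tree structure and the dotted path can be sketched as follows. The `Node` class below is a hypothetical stand-in written for this illustration, not the SDK's Model or DataPoint class; it only shows how a path results from walking the parent chain up to the root.

```python
from typing import List, Optional


class Node:
    """Hypothetical tree element used for illustration; not an SDK class."""

    def __init__(self, name: str, parent: Optional["Node"] = None) -> None:
        self.name = name
        self.parent = parent

    def get_path(self) -> str:
        """Join the names from the root model down to this node with dots."""
        names: List[str] = []
        node: Optional["Node"] = self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return ".".join(reversed(names))


# Build the branch Vehicle -> Cabin -> Seat -> Row1 -> Pos1 -> Position.
vehicle = Node("Vehicle")
cabin = Node("Cabin", vehicle)
seat = Node("Seat", cabin)
row1 = Node("Row1", seat)
pos1 = Node("Pos1", row1)
position = Node("Position", pos1)

print(position.get_path())  # prints "Vehicle.Cabin.Seat.Row1.Pos1.Position"
```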

Typed DataPoint classes

Every primitive datatype has a corresponding typed data point class, which is derived from DataPoint (e.g., DataPointInt32, DataPointFloat, DataPointBool, DataPointString, etc.).

Example

An example of a Vehicle Model created with the described ontology is shown below:

Python:

    # Import ontology classes
    from sdv import (
        DataPointArray,
        DataPointBool,
        DataPointDouble,
        DataPointFloat,
        DataPointInt32,
        DataPointString,
        Model,
        Service,
    )


    class Seat(Model):
        def __init__(self, name, parent):
            super().__init__(parent)
            self.name = name
            self.Position = DataPointBool("Position", self)
            self.IsOccupied = DataPointBool("IsOccupied", self)
            self.IsBelted = DataPointBool("IsBelted", self)
            self.Height = DataPointInt32("Height", self)
            self.Recline = DataPointInt32("Recline", self)


    class Cabin(Model):
        def __init__(self, name, parent):
            super().__init__(parent)
            self.name = name
            self.DriverPosition = DataPointInt32("DriverPosition", self)
            self.Seat = SeatCollection("Seat", self)


    class SeatCollection(Model):
        def __init__(self, name, parent):
            super().__init__(parent)
            self.name = name
            self.Row1 = self.RowType("Row1", self)
            self.Row2 = self.RowType("Row2", self)

        def Row(self, index: int):
            # Index-based access to the rows (1-based)
            if index < 1 or index > 2:
                raise IndexError(f"Index {index} is out of range")
            _options = {
                1: self.Row1,
                2: self.Row2,
            }
            return _options.get(index)

        class RowType(Model):
            def __init__(self, name, parent):
                super().__init__(parent)
                self.name = name
                self.Pos1 = Seat("Pos1", self)
                self.Pos2 = Seat("Pos2", self)
                self.Pos3 = Seat("Pos3", self)

            def Pos(self, index: int):
                # Index-based access to the seat positions (1-based)
                if index < 1 or index > 3:
                    raise IndexError(f"Index {index} is out of range")
                _options = {
                    1: self.Pos1,
                    2: self.Pos2,
                    3: self.Pos3,
                }
                return _options.get(index)


    class VehicleIdentification(Model):
        def __init__(self, name, parent):
            super().__init__(parent)
            self.name = name
            self.VIN = DataPointString("VIN", self)
            self.Model = DataPointString("Model", self)


    class CurrentLocation(Model):
        def __init__(self, name, parent):
            super().__init__(parent)
            self.name = name
            self.Latitude = DataPointDouble("Latitude", self)
            self.Longitude = DataPointDouble("Longitude", self)
            self.Timestamp = DataPointString("Timestamp", self)
            self.Altitude = DataPointDouble("Altitude", self)


    class Vehicle(Model):
        # The root model has no parent
        def __init__(self, name, parent=None):
            super().__init__(parent)
            self.name = name
            self.Speed = DataPointFloat("Speed", self)
            self.CurrentLocation = CurrentLocation("CurrentLocation", self)
            self.Cabin = Cabin("Cabin", self)


    vehicle = Vehicle("Vehicle")

C++:

    #include "sdk/DataPoint.h"

    #include "sdk/Model.h"

    #include <string>

    using namespace velocitas;

    class Seat : public Model {
    public:
        Seat(std::string name, Model* parent)
            : Model(name, parent) {}

        DataPointBoolean Position{"Position", this};
        DataPointBoolean IsOccupied{"IsOccupied", this};
        DataPointBoolean IsBelted{"IsBelted", this};
        DataPointInt32   Height{"Height", this};
        DataPointInt32   Recline{"Recline", this};
    };

    class CurrentLocation : public Model {
    public:
        CurrentLocation(Model* parent)
            : Model("CurrentLocation", parent) {}

        DataPointDouble Latitude{"Latitude", this};
        DataPointDouble Longitude{"Longitude", this};
        DataPointString Timestamp{"Timestamp", this};
        DataPointDouble Altitude{"Altitude", this};
    };

    class Cabin : public Model {
    public:
        class SeatCollection : public Model {
        public:
            class RowType : public Model {
            public:
                using Model::Model;

                Seat Pos1{"Pos1", this};
                Seat Pos2{"Pos2", this};
            };

            SeatCollection(Model* parent)
                : Model("Seat", parent) {}

            RowType Row1{"Row1", this};
            RowType Row2{"Row2", this};
        };

        Cabin(Model* parent)
            : Model("Cabin", parent) {}

        DataPointInt32 DriverPosition{"DriverPosition", this};
        SeatCollection Seat{this};
    };

    class Vehicle : public Model {
    public:
        Vehicle()
            : Model("Vehicle") {}

        DataPointFloat    Speed{"Speed", this};
        ::CurrentLocation CurrentLocation{this};
        ::Cabin           Cabin{this};
    };

Middleware integration

gRPC Services

Vehicle Services are expected to expose their public endpoints over the gRPC protocol. The related protobuf definitions are used to generate method stubs for the Vehicle Model to make it possible to call the methods of the Vehicle Services.

Model integration

Info

Please be aware that the integration of Vehicle Services into the overall model is currently not supported by the automated model lifecycle.

Based on the .proto files of the Vehicle Services, the protocol buffer compiler generates descriptors for all rpcs, messages, fields etc. for the target language.

The gRPC stubs are wrapped by a convenience layer class derived from Service that contains all the methods of the underlying protocol buffer specification.

Info

The convenience layer of C++ is a bit more extensive than in Python. The complexity of gRPC’s async API is hidden behind individual AsyncGrpcFacade implementations, which need to be implemented manually. Have a look at the SeatService of the SeatAdjusterApp example and its SeatServiceAsyncGrpcFacade.

Python:

    # SeatsStub and MoveRequest are generated from the .proto file of the Seat Service.
    class SeatService(Service):
        def __init__(self):
            super().__init__()
            self._stub = SeatsStub(self.channel)

        async def Move(self, seat: Seat):
            response = await self._stub.Move(
                MoveRequest(seat=seat), metadata=self.metadata
            )
            return response

C++:

    class SeatService : public Service {
    public:
        // nested classes/structs omitted

        SeatService(Model* parent)
            : Service("SeatService", parent)
            , m_asyncGrpcFacade(std::make_shared<SeatServiceAsyncGrpcFacade>(
                  grpc::CreateChannel("localhost:50051", grpc::InsecureChannelCredentials()))) {}

        AsyncResultPtr_t<VoidResult> move(Seat seat) {
            auto asyncResult = std::make_shared<AsyncResult<VoidResult>>();
            m_asyncGrpcFacade->Move(
                toGrpcSeat(seat),
                // Success callback: complete the async result
                [asyncResult](const auto& reply) { asyncResult->insertResult(VoidResult{}); },
                // Error callback: forward the converted gRPC status
                [asyncResult](const auto& status) { asyncResult->insertError(toInternalStatus(status)); });
            return asyncResult;
        }

    private:
        std::shared_ptr<SeatServiceAsyncGrpcFacade> m_asyncGrpcFacade;
    };

Fluent query & rule construction

A set of query methods like get(), where(), join() etc. are provided through the Model and DataPoint base classes. These functions make it possible to construct SQL-like queries and subscriptions in a fluent language, which are then transmitted through the gRPC interface to the KUKSA Databroker.
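The fluent style can be illustrated with a small, self-contained sketch. The `Query` class below is hypothetical and only assembles a query string; it does not talk to the Databroker and is not the SDK's implementation. The method names `join()` and `where()` mirror those mentioned above, while `build()` is an assumption added for this example.

```python
from typing import List, Optional


class Query:
    """Hypothetical fluent builder that only assembles the query string."""

    def __init__(self, first_path: str) -> None:
        self._paths: List[str] = [first_path]
        self._condition: Optional[str] = None

    def join(self, path: str) -> "Query":
        """Add another data point path to the SELECT list."""
        self._paths.append(path)
        return self  # returning self is what enables method chaining

    def where(self, condition: str) -> "Query":
        """Attach a filter condition."""
        self._condition = condition
        return self

    def build(self) -> str:
        query = "SELECT " + ", ".join(self._paths)
        if self._condition is not None:
            query += " WHERE " + self._condition
        return query


position = "Vehicle.Cabin.Seat.Row2.Pos1.Position"
rule = Query(position).where(f"{position} > 50").build()
print(rule)
```

Each method returns the builder itself, so calls can be chained into one expression that reads similarly to the resulting SQL-like rule.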

Query examples

The following examples show you how to query data points.

Get single data point

Python:

    driver_pos: int = vehicle.Cabin.DriverPosition.get()

    # Call to broker:
    # GetDataPoint(rule="SELECT Vehicle.Cabin.DriverPosition")

C++:

    auto driverPos = getDataPoints({Vehicle.Cabin.DriverPosition})->await();

    // Call to broker:
    // GetDataPoint(rule="SELECT Vehicle.Cabin.DriverPosition")

Get data points from multiple branches

Python:

    vehicle_data = vehicle.CurrentLocation.Latitude.join(
        vehicle.CurrentLocation.Longitude).get()

    # A triple-quoted f-string is needed for multi-line output
    print(f'''
        Latitude: {vehicle_data.CurrentLocation.Latitude}
        Longitude: {vehicle_data.CurrentLocation.Longitude}
        ''')

    # Call to broker:
    # GetDataPoint(rule="SELECT Vehicle.CurrentLocation.Latitude, CurrentLocation.Longitude")

C++:

    auto datapoints =
        getDataPoints({Vehicle.CurrentLocation.Latitude, Vehicle.CurrentLocation.Longitude})->await();

    // Call to broker:
    // GetDataPoint(rule="SELECT Vehicle.CurrentLocation.Latitude, CurrentLocation.Longitude")

Subscription examples

Subscribe and Unsubscribe to a single data point

Python:

    self.rule = (
        await self.vehicle.Cabin.Seat.Row(2).Pos(1).Position
        .subscribe(self.on_seat_position_change)
    )

    def on_seat_position_change(self, data: DataPointReply):
        position = data.get(self.vehicle.Cabin.Seat.Row2.Pos1.Position).value
        print(f'Seat position changed to {position}')

    # Call to broker:
    # Subscribe(rule="SELECT Vehicle.Cabin.Seat.Row2.Pos1.Position")

    # If needed, the subscription can be stopped like this:
    await self.rule.subscription.unsubscribe()

C++:

    auto subscription =
        subscribeDataPoints(
            velocitas::QueryBuilder::select(Vehicle.Cabin.Seat.Row(2).Pos(1).Position).build())
            ->onItem(
                [this](auto&& item) { onSeatPositionChanged(std::forward<decltype(item)>(item)); });

    // If needed, the subscription can be stopped like this:
    subscription->cancel();

    void onSeatPositionChanged(const DataPointMap_t datapoints) {
        // Convert the value explicitly; adding a number to a string literal would not concatenate
        logger().info("SeatPosition has changed to: " +
                      std::to_string(datapoints.at(Vehicle.Cabin.Seat.Row(2).Pos(1).Position)->asFloat().get()));
    }

Subscribe to a single data point with a filter

Python:

    Vehicle.Cabin.Seat.Row(2).Pos(1).Position.where(
        "Cabin.Seat.Row2.Pos1.Position > 50")
        .subscribe(on_seat_position_change)

    def on_seat_position_change(data: DataPointReply):
        position = data.get(Vehicle.Cabin.Seat.Row2.Pos1.Position).value
        print(f'Seat position changed to {position}')

    # Call to broker:
    # Subscribe(rule="SELECT Vehicle.Cabin.Seat.Row2.Pos1.Position WHERE Vehicle.Cabin.Seat.Row2.Pos1.Position > 50")

C++:

    auto query = QueryBuilder::select(Vehicle.Cabin.Seat.Row(2).Pos(1).Position)
                     .where(Vehicle.Cabin.Seat.Row(2).Pos(1).Position)
                     .gt(50)
                     .build();

    subscribeDataPoints(query)->onItem(
        [this](auto&& item) { onSeatPositionChanged(std::forward<decltype(item)>(item)); });

    void onSeatPositionChanged(const DataPointMap_t datapoints) {
        // Convert the value explicitly; adding a number to a string literal would not concatenate
        logger().info("SeatPosition has changed to: " +
                      std::to_string(datapoints.at(Vehicle.Cabin.Seat.Row(2).Pos(1).Position)->asFloat().get()));
    }

    // Call to broker:
    // Subscribe(rule="SELECT Vehicle.Cabin.Seat.Row2.Pos1.Position WHERE Vehicle.Cabin.Seat.Row2.Pos1.Position > 50")

Publish & subscribe messaging

The SDK supports publishing messages to an MQTT broker and subscribing to topics of an MQTT broker. The Velocitas SDK abstracts the low-level MQTT communication away from the Vehicle App developer. In particular, the physical address and port of the MQTT broker are no longer configured in the Vehicle App itself, but are set through an environment variable outside of the Vehicle App.

Publish MQTT Messages

MQTT messages can be published easily with the publish_event() method, inherited from the VehicleApp base class:

Python:

    await self.publish_event(
        "seatadjuster/currentPosition", json.dumps(req_data))

C++:

    publishToTopic("seatadjuster/currentPosition", "{ \"position\": 40 }");

Subscribe to MQTT Topics

In Python, subscriptions to MQTT topics can be established with the subscribe_topic() decorator, which needs to be applied to a method of the class derived from the VehicleApp base class. In C++, the subscribeToTopic() method has to be called, and callbacks for onItem and onError can be set. The following examples provide some more details.

Python:

    @subscribe_topic("seatadjuster/setPosition/request")
    async def on_set_position_request_received(self, data: str) -> None:
        data = json.loads(data)
        logger.info("Set Position Request received: data=%s", data)

C++:

    #include <fmt/core.h>
    #include <nlohmann/json.hpp>

    subscribeToTopic("seatadjuster/setPosition/request")->onItem([this](auto&& item) {
        const auto jsonData = nlohmann::json::parse(item);
        // dump() serializes the JSON object to a string that can be formatted
        logger().info(fmt::format("Set Position Request received: data={}", jsonData.dump()));
    });

Vehicle App abstraction

Vehicle Apps derive from the VehicleApp base class. This enables the Vehicle App to use the Publish & Subscribe messaging and to connect to the KUKSA Databroker.

The Vehicle Model instance is passed to the constructor of the VehicleApp class and should be stored in a member variable (e.g. self.vehicle for Python, std::shared_ptr<Vehicle> m_vehicle; for C++), to be used by all methods within the application.

Finally, the run() method of the VehicleApp class is called to start the Vehicle App and register all MQTT topic and Databroker subscriptions.

Implementation detail

In Python, the subscriptions are based on asyncio, which makes it necessary to call the run() method with an active asyncio event_loop.

A typical skeleton of a Vehicle App looks like this:

Python:

    import asyncio
    import logging
    import signal

    # VehicleApp is provided by the SDK; Vehicle and vehicle come from the Vehicle Model.
    logger = logging.getLogger(__name__)


    class SeatAdjusterApp(VehicleApp):
        def __init__(self, vehicle: Vehicle):
            super().__init__()
            self.vehicle = vehicle


    async def main():
        # Main function
        logger.info("Starting seat adjuster app...")
        seat_adjuster_app = SeatAdjusterApp(vehicle)
        await seat_adjuster_app.run()


    LOOP = asyncio.get_event_loop()
    LOOP.add_signal_handler(signal.SIGTERM, LOOP.stop)
    LOOP.run_until_complete(main())
    LOOP.close()

C++:

    #include "sdk/VehicleApp.h"
    #include "vehicle/Vehicle.hpp"

    using namespace velocitas;

    class SeatAdjusterApp : public VehicleApp {
    public:
        SeatAdjusterApp()
            : VehicleApp(IVehicleDataBrokerClient::createInstance("vehicledatabroker"),
                         IPubSubClient::createInstance("localhost:1883", "SeatAdjusterApp")) {}

    private:
        ::Vehicle Vehicle;
    };

    int main(int argc, char** argv) {
        SeatAdjusterApp app;
        app.run();
        return 0;
    }

Further information

- Tutorial: Quickstart

- Tutorial: Vehicle Model Creation

- Tutorial: Vehicle App Development

- Tutorial: Develop and run integration tests for a Vehicle App

1.2 - Vehicle Abstraction Layer (VAL)

Introduction

The Vehicle Abstraction Layer (VAL) enables access to the systems and functions of a vehicle via a unified or, even better, a standardized Vehicle API that abstracts from the details of the end-to-end architecture of the vehicle. The unified API enables Vehicle Apps to run on different vehicle architectures of a single OEM. Vehicle Apps can even be implemented in an OEM-agnostic way if they use an API based on a standard like the COVESA Vehicle Signal Specification (VSS). The Vehicle API eliminates the need to know the source, destination, and format of signals for the vehicle system.

The Eclipse Velocitas project uses the Eclipse KUKSA project. KUKSA does not provide a concrete VAL. That is up to you as an OEM (vehicle manufacturer) or as a supplier. But KUKSA provides the components and tools that help you to implement a VAL for your chosen end-to-end architecture. It can also support you in simulating the vehicle hardware during the development phase of a Vehicle App or Service.

KUKSA provides you with ready-to-use generic components for the signal-based access to the vehicle, like the KUKSA Databroker and the generic Data Providers (aka Data Feeders). It also provides you reference implementations of certain Vehicle Services, like the Seat Service and the HVAC Service.

Architecture

The image below shows the main components of the VAL and its relation to the Velocitas Development Model.

KUKSA Databroker

The KUKSA Databroker is a gRPC service acting as a broker for vehicle data and signals, called data points in the following. It provides central access to vehicle data points arranged in a (preferably standardized) vehicle data model like the COVESA VSS. Using a standard is not mandatory: it is also possible to use your own (proprietary) vehicle model or to extend the COVESA VSS with your specific extensions via VSS overlays.

Data points represent certain states of a vehicle, like the current vehicle speed or the currently applied gear. Data points can represent sensor values like the vehicle speed or engine temperature, actuators like the wiper mode, and immutable attributes of the vehicle like the needed fuel type(s) of the vehicle, engine displacement, maximum power, etc.

Data points that logically belong together are typically arranged in branches and sub-branches of a tree structure (like this example on the COVESA VSS site).

The KUKSA Databroker is implemented in Rust, can run in a container and provides services to get data points, update data points and for subscribing to automatic notifications on data point changes. Filter- and rule-based subscriptions of data points can be used to reduce the number of updates sent to the subscriber.
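The get, update and subscribe operations can be pictured with a toy in-memory broker. This sketch is purely illustrative: the `ToyBroker` class and its method names are assumptions made here and do not reflect the Databroker's gRPC API. It shows one simple way to reduce update traffic, namely notifying subscribers only when a value actually changes.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Callback = Callable[[str, Any], None]


class ToyBroker:
    """In-memory illustration of get/update/subscribe; not the KUKSA API."""

    def __init__(self) -> None:
        self._values: Dict[str, Any] = {}
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def get(self, path: str) -> Any:
        """Return the last known value of a data point, or None if unset."""
        return self._values.get(path)

    def subscribe(self, path: str, callback: Callback) -> None:
        """Register a callback for changes of one data point."""
        self._subscribers[path].append(callback)

    def update(self, path: str, value: Any) -> None:
        """Store a value and notify subscribers only if it changed."""
        if path in self._values and self._values[path] == value:
            return
        self._values[path] = value
        for callback in self._subscribers[path]:
            callback(path, value)


broker = ToyBroker()
seen: List[float] = []
broker.subscribe("Vehicle.Speed", lambda path, value: seen.append(value))
broker.update("Vehicle.Speed", 10.0)
broker.update("Vehicle.Speed", 10.0)  # unchanged value, no notification
broker.update("Vehicle.Speed", 12.5)
print(seen)  # prints [10.0, 12.5]
```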

Data Providers / Data Feeders

Conceptually, a data provider is responsible for a certain set of data points: it provides updates of sensor data from the vehicle to the Databroker and forwards updates of actuator values to the vehicle. The set of data points a data provider maintains may depend on the network interface (e.g. CAN bus) through which the data is accessible, or on the use case the provider is responsible for (like seat control).

Eclipse KUKSA provides several generic Data Providers for different data sources.

As of today, Eclipse Velocitas only utilizes the generic CAN Provider (KUKSA CAN Provider) implemented in Python, which reads data from a CAN bus based on mappings specified in e.g. a CAN network description (dbc) file.

The feeder uses a mapping file and data point metadata to convert the source data to data points and injects them into the Databroker using its Collector gRPC interface.

The feeder automatically reconnects to the Databroker in the event that the connection is lost.
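The conversion step performed by a feeder can be sketched roughly as follows. Everything in this example is hypothetical: the signal name `VehSpd`, the scale and offset values, and the dictionary-based mapping format are made up for illustration and do not reflect the actual mapping file format of the KUKSA CAN Provider.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class SignalMapping(NamedTuple):
    """Hypothetical mapping entry from a CAN signal to a data point."""

    path: str      # target data point path
    scale: float   # factor applied to the raw value
    offset: float  # offset added after scaling


# Assumed example mapping; a real feeder would derive this from its mapping file.
MAPPING: Dict[str, SignalMapping] = {
    "VehSpd": SignalMapping(path="Vehicle.Speed", scale=0.5, offset=0.0),
}


def to_data_point(signal_name: str, raw_value: int) -> Optional[Tuple[str, float]]:
    """Convert a raw CAN signal value into a (path, value) pair.

    Returns None for signals that have no mapping and should be ignored.
    """
    mapping = MAPPING.get(signal_name)
    if mapping is None:
        return None
    return mapping.path, raw_value * mapping.scale + mapping.offset


print(to_data_point("VehSpd", 100))  # prints ('Vehicle.Speed', 50.0)
```

The resulting pair is what a feeder would then inject into the Databroker.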

Vehicle Services

A vehicle service enables a Vehicle App to interact with the vehicle systems on an RPC-like basis. It can provide service interfaces to control actuators or to trigger (complex) actions, or provide interfaces to get data. It communicates with the Hardware Abstraction to execute the underlying services, but may also interact with the Databroker.

The KUKSA Incubation repository contains examples illustrating how such kind of vehicle services can be built.

Hardware Abstraction

Data feeders rely on hardware abstraction. Hardware abstraction is project/platform specific. The reference implementation relies on SocketCAN and vxcan, see KUKSA CAN Provider. The hardware abstraction may offer replaying (e.g., CAN) data from a file (can dump file) when the respective data source (e.g., CAN) is not available.

Overview of the VAL architecture

Information Flow

The VAL offers an information flow between vehicle networks and vehicle services. The data that can flow is ultimately limited to the data available through the Hardware Abstraction, which is platform/project-specific. The KUKSA Databroker offers read/subscribe access to data points based on a gRPC service. The data points which are actually available are defined by the set of feeders providing the data into the broker. Services (like the seat service) define which CAN signals they listen to and which CAN signals they send themselves, see documentation. Service implementations may also interact as feeders with the Databroker.

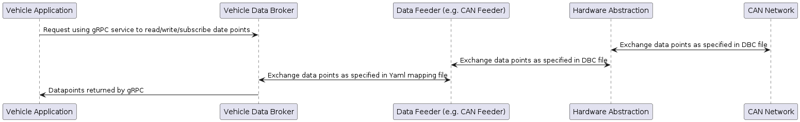

Data flow when a Vehicle App uses the KUKSA Databroker

Architectural representation of the KUKSA Databroker data flow

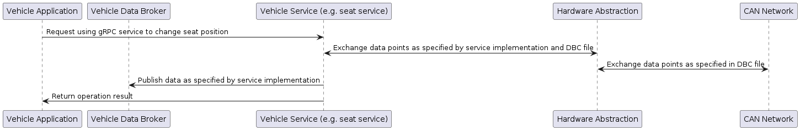

Data flow when a Vehicle App uses a Vehicle Service

Architectural representation of the vehicle service data flow

Source Code

Source code and build instructions are available in the respective KUKSA repositories:

GRPC Interface Style Guide

A style guide is available in the GRPC Interface Style Guide

1.2.1 - GRPC Interface Style Guide

This section provides a style guide for .proto files. By following these conventions, you’ll make your protocol buffer message definitions and their corresponding classes consistent and easy to read. Unless otherwise indicated, this style guide is based on the Google Protocol Buffers style guide under Apache 2.0 License & Creative Commons Attribution 4.0 License.

Note that protocol buffer style can evolve over time, so it is likely that you will see .proto files written in different conventions or styles. Please respect the existing style when you modify these files. Consistency is key. However, it is best to adopt the current best style when you are creating a new .proto file.

Standard file formatting

- Keep the line length to 80 characters.

- Use an indent of 2 spaces.

- Prefer the use of double quotes for strings.

File structure

Files should be named lower_snake_case.proto

All files should be ordered in the following manner:

- License header

- File overview

- Syntax

- Package

- Imports (sorted)

- File options

- Everything else

Directory Structure

Files should be stored in a directory structure that matches their package sub-names. All files in a given directory should be in the same package. Below is an example based on the proto files in the kuksa-databroker repository.

| proto/
| └── sdv
|     └── databroker
|         └── v1                   // package sdv.databroker.v1
|             ├── broker.proto     // service Broker in sdv.databroker.v1
|             ├── collector.proto  // service Collector in sdv.databroker.v1
|             └── types.proto      // type definitions in sdv.databroker.v1

The proposed structure shown above is adapted from Uber Protobuf Style Guide V2 under MIT License.
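The directory rule can be expressed as a simple mapping from package name to file path. The helper below is a hypothetical sketch written for this guide, not part of any tooling; the package name `sdv.databroker.v1` and the `proto` root directory are assumed here to match the directory tree shown above.

```python
from pathlib import PurePosixPath


def proto_path(package: str, file_name: str, root: str = "proto") -> PurePosixPath:
    """Return the expected location of a .proto file for a given package.

    Each dot-separated package sub-name becomes one directory level.
    """
    return PurePosixPath(root, *package.split("."), file_name)


print(proto_path("sdv.databroker.v1", "broker.proto"))
# prints proto/sdv/databroker/v1/broker.proto
```

A check like this could be used in a linter to flag files whose location does not match their declared package.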

Packages

Package names should be in lowercase. Package names should have unique names based on the project name, and possibly based on the path of the file containing the protocol buffer type definitions.

Message and field names

Use PascalCase (CamelCase with an initial capital) for message names – for example, SongServerRequest. Use underscore_separated_names for field names (including oneof field and extension names) – for example, song_name.

    message SongServerRequest {
      optional string song_name = 1;
    }

Using this naming convention for field names gives you accessors like the following:

C++:

    const string& song_name() { ... }
    void set_song_name(const string& x) { ... }

If your field name contains a number, the number should appear after the letter instead of after the underscore. For example, use song_name1 instead of song_name_1.

Repeated fields

Use pluralized names for repeated fields.

repeated string keys = 1;

...

repeated MyMessage accounts = 17;

Enums

Use PascalCase (with an initial capital) for enum type names and CAPITALS_WITH_UNDERSCORES for value names:

    enum FooBar {
      FOO_BAR_UNSPECIFIED = 0;
      FOO_BAR_FIRST_VALUE = 1;
      FOO_BAR_SECOND_VALUE = 2;
    }

Each enum value should end with a semicolon, not a comma. The zero value enum should have the suffix UNSPECIFIED.
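The naming rules for messages, fields and enum values lend themselves to automated checks. The following sketch uses regular expressions that encode one reasonable reading of the conventions above; the function names and the exact patterns are assumptions made for this example, not an official linter.

```python
import re

# PascalCase: starts with an uppercase letter, no underscores.
MESSAGE_NAME = re.compile(r"[A-Z][A-Za-z0-9]*")
# lower_snake_case: every underscore-separated part starts with a letter,
# so numbers must follow a letter (song_name1, not song_name_1).
FIELD_NAME = re.compile(r"[a-z][a-z0-9]*(?:_[a-z][a-z0-9]*)*")
# CAPITALS_WITH_UNDERSCORES for enum values.
ENUM_VALUE = re.compile(r"[A-Z][A-Z0-9]*(?:_[A-Z0-9]+)*")


def is_message_name(name: str) -> bool:
    return MESSAGE_NAME.fullmatch(name) is not None


def is_field_name(name: str) -> bool:
    return FIELD_NAME.fullmatch(name) is not None


def is_enum_value(name: str) -> bool:
    return ENUM_VALUE.fullmatch(name) is not None


def is_zero_enum_value(name: str) -> bool:
    """The zero value of an enum should carry the suffix UNSPECIFIED."""
    return is_enum_value(name) and name.endswith("UNSPECIFIED")


print(is_field_name("song_name1"), is_field_name("song_name_1"))  # prints True False
```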

Services

If your .proto defines an RPC service, you should use PascalCase (with an initial capital) for both the service name and any RPC method names:

    service FooService {
      rpc GetSomething(GetSomethingRequest) returns (GetSomethingResponse);
      rpc ListSomething(ListSomethingRequest) returns (ListSomethingResponse);
    }

GRPC Interface Versioning

All API interfaces must provide a major version number, which is encoded at the end of the protobuf package. If an API introduces a breaking change, such as removing or renaming a field, it must increment its API version number to ensure that existing user code does not suddenly break. Note: The use of the term “major version number” above is taken from semantic versioning. However, unlike in traditional semantic versioning, APIs must not expose minor or patch version numbers. For example, APIs use v1, not v1.0, v1.1, or v1.4.2. From a user’s perspective, minor versions are updated in place, and users receive new functionality without migration.

A new major version of an API must not depend on a previous major version of the same API. An API may depend on other APIs, with an expectation that the caller understands the dependency and stability risk associated with those APIs. In this scenario, a stable API version must only depend on stable versions of other APIs.

Different versions of the same API should preferably be able to work at the same time within a single client application for a reasonable transition period. This time period allows the client to transition smoothly to the newer version. An older version must go through a reasonable, well-communicated deprecation period before being shut down.

For releases that have alpha or beta stability, APIs must append the stability level after the major version number in the protobuf package.

Release-based versioning

An individual release is an alpha or beta release that is expected to be available for a limited time period before its functionality is incorporated into the stable channel, after which the individual release will be shut down. When using release-based versioning strategy, an API may have any number of individual releases at each stability level.

Alpha and beta releases must have their stability level appended to the version, followed by an incrementing release number. For example, v1beta1 or v1alpha5. APIs should document the chronological order of these versions in their documentation (such as comments). Each alpha or beta release may be updated in place with backwards-compatible changes. For beta releases, backwards-incompatible updates should be made by incrementing the release number and publishing a new release with the change. For example, if the current version is v1beta1, then v1beta2 is released next.

Adapted from google release-based_versioning under Apache 2.0 License & Creative Commons Attribution 4.0 License
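As an illustration of the version suffix rules above (v1, v1beta1, v1alpha5, but never v1.0), the following sketch parses the last component of a protobuf package name. The function name, the ApiVersion tuple and the package name used in the usage note are hypothetical; only the suffix format is taken from the text.

```python
import re
from typing import NamedTuple, Optional

# Major version, optionally followed by a stability level and release number.
_VERSION = re.compile(
    r"^v(?P<major>[1-9]\d*)(?:(?P<stability>alpha|beta)(?P<release>[1-9]\d*))?$"
)


class ApiVersion(NamedTuple):
    major: int
    stability: str  # "stable", "alpha" or "beta"
    release: Optional[int]  # None for stable versions


def parse_package_version(package: str) -> ApiVersion:
    """Parse the version encoded at the end of a protobuf package name."""
    suffix = package.rsplit(".", 1)[-1]
    match = _VERSION.match(suffix)
    if match is None:
        raise ValueError(f"no valid version suffix in package {package!r}")
    release = match.group("release")
    return ApiVersion(
        major=int(match.group("major")),
        stability=match.group("stability") or "stable",
        release=int(release) if release else None,
    )
```

With this sketch, a package such as example.seats.v1beta2 yields major version 1, stability beta and release 2, while a package ending in v1.0 is rejected because minor versions must not be exposed.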

Backwards compatibility

The gRPC protocol is designed to support services that change over time. Generally, additions to gRPC services and methods are non-breaking. Non-breaking changes allow existing clients to continue working without changes. Changing or deleting gRPC services are breaking changes. When gRPC services have breaking changes, clients using that service have to be updated and redeployed.

Making non-breaking changes to a service has a number of benefits:

- Existing clients continue to run.

- Avoids work involved with notifying clients of breaking changes, and updating them.

- Only one version of the service needs to be documented and maintained.

Non-breaking changes

These changes are non-breaking at a gRPC protocol level and binary level.

- Adding a new service

- Adding a new method to a service

- Adding a field to a request message - Fields added to a request message are deserialized with the default value on the server when not set. To be a non-breaking change, the service must succeed when the new field isn’t set by older clients.

- Adding a field to a response message - Fields added to a response message are deserialized into the message’s unknown fields collection on the client.

- Adding a value to an enum - Enums are serialized as a numeric value. New enum values are deserialized on the client to the enum value without an enum name. To be a non-breaking change, older clients must run correctly when receiving the new enum value.
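To illustrate the last point, here is a minimal sketch of a client that keeps working when it receives an enum number it does not know yet. It uses a plain Python IntEnum as a stand-in for generated Protobuf code; the helper names are hypothetical.

```python
from enum import IntEnum
from typing import Union


class FooBar(IntEnum):
    """Enum values known to the (older) client."""

    FOO_BAR_UNSPECIFIED = 0
    FOO_BAR_FIRST_VALUE = 1


def decode_foo_bar(wire_value: int) -> Union[FooBar, int]:
    """Return the named member, or the raw number if the name is unknown."""
    try:
        return FooBar(wire_value)
    except ValueError:
        return wire_value


def describe(wire_value: int) -> str:
    """Handle known and unknown values without failing."""
    value = decode_foo_bar(wire_value)
    if isinstance(value, FooBar):
        return value.name
    return f"unrecognized value {value}"
```

An older client written this way treats a newly added value (for example 2) as an unrecognized number instead of raising an error.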

Binary breaking changes

The following changes are non-breaking at a gRPC protocol level, but the client needs to be updated if it upgrades to the latest .proto contract. Binary compatibility is important if you plan to publish a gRPC library.

- Removing a field - Values from a removed field are deserialized to a message’s unknown fields. This isn’t a gRPC protocol breaking change, but the client needs to be updated if it upgrades to the latest contract. It’s important that a removed field number isn’t accidentally reused in the future. To ensure this doesn’t happen, specify deleted field numbers and names on the message using Protobuf’s reserved keyword.

- Renaming a message - Message names aren’t typically sent on the network, so this isn’t a gRPC protocol breaking change. The client will need to be updated if it upgrades to the latest contract. One situation where message names are sent on the network is with Any fields, when the message name is used to identify the message type.

- Nesting or unnesting a message - Message types can be nested. Nesting or unnesting a message changes its message name. Changing how a message type is nested has the same impact on compatibility as renaming.

Protocol breaking changes

The following items are protocol and binary breaking changes:

- Renaming a field - With Protobuf content, the field names are only used in generated code. The field number is used to identify fields on the network. Renaming a field isn’t a protocol breaking change for Protobuf. However, if a server is using JSON content, then renaming a field is a breaking change.

- Changing a field data type - Changing a field’s data type to an incompatible type will cause errors when deserializing the message. Even if the new data type is compatible, it’s likely the client needs to be updated to support the new type if it upgrades to the latest contract.

- Changing a field number - With Protobuf payloads, the field number is used to identify fields on the network.

- Renaming a package, service or method - gRPC uses the package name, service name, and method name to build the URL. The client gets an UNIMPLEMENTED status from the server.

- Removing a service or method - The client gets an UNIMPLEMENTED status from the server when calling the removed method.
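The reason why renaming a package, service or method is protocol breaking becomes visible when the request path is written out. The sketch below builds the path in the form /package.Service/Method; the function name and the package foo.v1 are hypothetical.

```python
def grpc_method_path(package: str, service: str, method: str) -> str:
    """Build the request path a gRPC client uses to address a method."""
    qualified_service = f"{package}.{service}" if package else service
    return f"/{qualified_service}/{method}"


old_path = grpc_method_path("foo.v1", "FooService", "GetSomething")
new_path = grpc_method_path("foo.v1", "FooService", "FetchSomething")
```

Because old_path and new_path differ, a client that still calls the old path reaches no handler on the updated server and receives UNIMPLEMENTED.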

Behavior breaking changes

When making non-breaking changes, you must also consider whether older clients can continue working with the new service behavior. For example, adding a new field to a request message:

- Isn’t a protocol breaking change.

- Returning an error status on the server if the new field isn’t set makes it a breaking change for old clients.

Behavior compatibility is determined by your app-specific code.

Adapted from Versioning gRPC services under Creative Commons Attribution 4.0 License
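A behavior-compatible server can be sketched as follows. The request type, the field names and the fallback value are hypothetical; a dataclass stands in for a generated Protobuf message, where an unset numeric field arrives with its default value 0.

```python
from dataclasses import dataclass

DEFAULT_VOLUME = 5  # hypothetical server-side fallback


@dataclass
class PlaySongRequest:
    song_name: str = ""
    volume: int = 0  # newly added field; older clients never set it


def handle_play_song(request: PlaySongRequest) -> str:
    """Succeed for older clients instead of rejecting the unset field."""
    volume = request.volume if request.volume != 0 else DEFAULT_VOLUME
    return f"playing {request.song_name} at volume {volume}"
```

Returning an error when volume is unset would turn the otherwise non-breaking field addition into a breaking change for old clients.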

gRPC Error Handling

In gRPC, a large set of error codes has been defined. As a general rule, SDV should use relevant gRPC error codes, as described in this thread.

return grpc::Status(grpc::StatusCode::NOT_FOUND, "error details here");

Available constructor:

grpc::Status::Status ( StatusCode code,

const std::string & error_message,

const std::string & error_details )

A framework for drafting error messages could be useful as a later improvement. It could, for example, be used to specify which unit created the error message and to ensure that all messages share the same structure. What is included may depend on debug settings, e.g., error details only in debug builds to avoid leaking sensitive information. A global function like the one below could handle that and possibly also convert between internal error codes and gRPC codes.

grpc::Status status = CreateStatusMessage(PERMISSION_DENIED,"DataBroker","Rule access rights violated");
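The idea of such a helper can be sketched in a few lines. This is an illustration only and does not use the gRPC library: StatusCode lists a subset of the gRPC codes with their standard numeric values, while Status, create_status_message and the "[unit] message" layout are assumptions.

```python
from enum import IntEnum
from typing import NamedTuple


class StatusCode(IntEnum):
    """Subset of the gRPC status codes with their standard numeric values."""

    OK = 0
    UNKNOWN = 2
    NOT_FOUND = 5
    PERMISSION_DENIED = 7
    INTERNAL = 13


class Status(NamedTuple):
    code: StatusCode
    message: str
    details: str


def create_status_message(code, unit, message, details="", debug=False) -> Status:
    """Build a status whose message always has the layout '[unit] message'.

    Details are dropped unless debug is True, so that potentially
    sensitive information does not leave the service in release builds.
    """
    return Status(code, f"[{unit}] {message}", details if debug else "")
```

Calling create_status_message(StatusCode.PERMISSION_DENIED, "DataBroker", "Rule access rights violated", details="...") yields a status whose details are empty unless debug is enabled.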

SDV error handling for gRPC interfaces (e.g., VAL vehicle services)

- Use gRPC error codes as base

- Document in proto files (as comments) which error codes the service implementation can emit and what they mean. (Errors that are only emitted by the gRPC framework do not need to be listed.)

- Do not - unless there are special reasons - add explicit error/status fields to rpc return messages.

- Additional error information can be given by free text fields in gRPC error codes. Note, however, that sensitive information like "Given password ABCD does not match expected password EFGH" should not be passed in an unprotected/unencrypted manner.

SDV handling of gRPC error codes

The table below gives guidelines for each gRPC error code on:

- If it is relevant for a client to retry the call or not when receiving the error code. Retry is only relevant if the error is of a temporary nature.

- When to use the error code when implementing a service.

| gRPC error code | Retry Relevant? | Recommended SDV usage |
|---|---|---|
| OK | No | Mandatory error code if operation succeeded. Shall never be used if operation failed. |
| CANCELLED | No | No explicit use case on server side in SDV identified |
| UNKNOWN | No | To be used in default-statements when converting errors from e.g., Broker-errors to SDV/gRPC errors |
| INVALID_ARGUMENT | No | E.g., Rule syntax with errors |
| DEADLINE_EXCEEDED | Yes | Only applicable for asynchronous services, i.e. services which wait for completion before the result is returned. The behavior if an operation cannot finish within expected time must be defined. Two options exist. One is to return this error after e.g., X seconds. Another is that the server never gives up, but rather waits for the client to cancel the operation. |
| NOT_FOUND | No | Long-term situation that is unlikely to change in the near future. Example: SDV cannot find the specified resource (e.g., no path to get data for specified seat) |
| ALREADY_EXISTS | No | No explicit use case on server side in SDV identified |
| PERMISSION_DENIED | No | Operation rejected due to permission denied |
| RESOURCE_EXHAUSTED | Yes | Possibly if e.g., malloc fails or similar errors. |
| FAILED_PRECONDITION | Yes | Could be returned if e.g., operation is rejected due to safety reasons. (E.g., vehicle moving) |
| ABORTED | Yes | Could e.g., be returned if service does not support concurrent requests, and there is already either a related operation ongoing or the operation is aborted due to a newer request received. Could also be used if an operation is aborted on user/driver request, e.g., physical button in vehicle pressed. |
| OUT_OF_RANGE | No | E.g., Arguments out of range |
| UNIMPLEMENTED | No | To be used if certain use-cases of the service are not implemented, e.g., if recline cannot be adjusted |
| INTERNAL | No | Internal errors, like exceptions, unexpected null pointers and similar |
| UNAVAILABLE | Yes | To be used if the service is temporarily unavailable, e.g., during system startup. |
| DATA_LOSS | No | No explicit use case identified on server side in SDV. |
| UNAUTHENTICATED | No | No explicit use case identified on server side in SDV. |
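On the client side, the "Retry Relevant?" column can be turned into a small retry policy. The following is a sketch under assumptions: RpcError is a hypothetical stand-in for the error type of a real gRPC client, and the number of attempts and the exponential backoff are illustrative choices, not SDV requirements.

```python
import time

# Codes marked "Retry Relevant? Yes" in the table above.
RETRY_RELEVANT = frozenset(
    {"DEADLINE_EXCEEDED", "RESOURCE_EXHAUSTED", "FAILED_PRECONDITION", "ABORTED", "UNAVAILABLE"}
)


class RpcError(Exception):
    """Hypothetical stand-in for the error raised by a gRPC client call."""

    def __init__(self, code: str, message: str = ""):
        super().__init__(f"{code}: {message}")
        self.code = code


def is_retry_relevant(code: str) -> bool:
    return code in RETRY_RELEVANT


def call_with_retry(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); retry with exponential backoff on temporary errors.

    attempts is expected to be at least 1.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except RpcError as error:
            is_last_attempt = attempt == attempts - 1
            if is_last_attempt or not is_retry_relevant(error.code):
                raise
            sleep(base_delay * 2**attempt)
```

Errors such as NOT_FOUND or PERMISSION_DENIED are re-raised immediately, because repeating the call would not change the outcome.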

Other references

1.3 - Vehicle App Manifest

Versions

- v1

- v2

- v3 (current)

Introduction

The AppManifest defines the properties of your Vehicle App and its functional interfaces (FIs).

FIs may be:

- required service interfaces (e.g. a required gRPC service interface)

- the used vehicle model and accessed data points.

- an arbitrary abstract interface description used by 3rd parties

In addition to required FIs, provided FIs can (and need to) be specified as well.

These defined interfaces are then used by the Velocitas toolchain to:

- generate service stubs for either a client implementation (required IF) or a server implementation (provided IF) (i.e. for gRPC)

- generate a source code equivalent of the defined vehicle model

Overview

The image below depicts the interaction between App Manifest and DevEnv Configuration at development time. The responsibilities are clearly separated: the App Manifest describes the application and its interfaces, whereas the DevEnv Configuration (or .velocitas.json) defines the configuration of the development environment and all the packages used by the Velocitas toolchain.

Context

To fully understand the AppManifest, let’s have a look at who interacts with it:

Purpose

- Define the requirements of a Vehicle App in an abstract way to avoid dependencies on concrete Runtime and Middleware configurations.

- Describe your application's functional interfaces (VehicleModel, services, APIs, …).

- Enable loose coupling of functional interface descriptions and the Velocitas toolchain. Some parts of the toolchain are responsible for reading the file and acting upon it, depending on the type of functional interface.

- Provide an extendable syntax to enable custom functional interface types which may not be provided by the Velocitas toolchain itself, but by a third party.

- Provide a single source of truth for the generation of deployment specifications (e.g. Kanto spec).

Example

// AppManifest.json

{

"manifestVersion": "v3",

"name": "SampleApp",

"interfaces": [

{

"type": "vehicle-signal-interface",

"config": {

"src": "https://github.com/COVESA/vehicle_signal_specification/releases/download/v3.0/vss_rel_3.0.json",

"datapoints": {

"required": [

{

"path": "Vehicle.Speed",

"optional": true,

"access": "read"

}

],

"provided": [

{

"path": "Vehicle.Cabin.Seat.Row1.Pos1.Position"

}

]

}

}

},

{

"type": "grpc-interface",

"config": {

"src": "https://raw.githubusercontent.com/eclipse-kuksa/kuksa-incubation/0.4.0/seat_service/proto/sdv/edge/comfort/seats/v1/seats.proto",

"required": {

"methods": [ "Move", "MoveComponent" ]

}

}

},

{

"type": "pubsub",

"config": {

"reads": [ "sampleapp/getSpeed" ],

"writes": [ "sampleapp/currentSpeed", "sampleapp/getSpeed/response" ]

}

}

]

}

The VehicleApp above has an:

- interface towards our generated Vehicle Signal Interface based on the COVESA Vehicle Signal Specification. In particular, it requires read access to the vehicle signal Vehicle.Speed. Since the signal is marked as optional, the application will work even if the signal is not present in the system. Additionally, the application acts as a provider for the signal Vehicle.Cabin.Seat.Row1.Pos1.Position, meaning that it will take responsibility for reading/writing data directly to vehicle networks for the respective signal.

- interface towards gRPC based on the seats.proto file. Since the direction is required, a service client for the seats service will be generated, which interacts with the Velocitas middleware.

- interface towards the pubsub middleware. It reads from the topic sampleapp/getSpeed and writes to the topics sampleapp/currentSpeed and sampleapp/getSpeed/response.

Apart from the provided datapoint, the example has no provided interfaces.
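To make the structure of the manifest more tangible, the following sketch reads a shortened manifest and extracts the interface configurations by type. It is not how the Velocitas toolchain processes the file; the helper names configs_of_type and datapoint_paths are hypothetical.

```python
import json

# Shortened manifest for illustration; same structure as the example above.
MANIFEST_TEXT = """
{
  "manifestVersion": "v3",
  "name": "SampleApp",
  "interfaces": [
    {
      "type": "vehicle-signal-interface",
      "config": {
        "datapoints": {
          "required": [{"path": "Vehicle.Speed", "optional": true, "access": "read"}],
          "provided": [{"path": "Vehicle.Cabin.Seat.Row1.Pos1.Position"}]
        }
      }
    },
    {
      "type": "pubsub",
      "config": {"reads": ["sampleapp/getSpeed"], "writes": ["sampleapp/currentSpeed"]}
    }
  ]
}
"""


def configs_of_type(manifest: dict, type_key: str) -> list:
    """Return the config objects of all interfaces with the given type."""
    return [
        interface.get("config", {})
        for interface in manifest.get("interfaces", [])
        if interface.get("type") == type_key
    ]


def datapoint_paths(manifest: dict, direction: str) -> list:
    """Collect datapoint paths; direction is 'required' or 'provided'."""
    paths = []
    for config in configs_of_type(manifest, "vehicle-signal-interface"):
        for datapoint in config.get("datapoints", {}).get(direction, []):
            paths.append(datapoint["path"])
    return paths


manifest = json.loads(MANIFEST_TEXT)
```

Here datapoint_paths(manifest, "required") returns the list containing Vehicle.Speed, and configs_of_type(manifest, "pubsub") returns the single pubsub configuration with its reads and writes.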

Structure

Describes all external properties and interfaces of a Vehicle Application.

Properties

| Property | Type | Required | Description |
|---|---|---|---|
| manifestVersion | string | Yes | The version of the App Manifest. |
| name | string | Yes | The name of the Vehicle Application. |
| interfaces | object[] | No | Array of all provided or required functional interfaces. |

Interfaces

Properties

| Property | Type | Required | Description |
|---|---|---|---|
| type | string | Yes | The type of the functional interface. |
| config | object | No | The configuration of the functional interface type. Content may vary between all types. |

Config

The configuration of the functional interface type. Content may vary between all types.

Refer to the JSON Schema of the current AppManifest here.

Visualization

Functional interface types supported by Velocitas

Here is a list of functional interface types directly supported by the Velocitas toolchain and which Velocitas CLI packages are exposing the support:

Support for additional interface types may be added by providing a 3rd party CLI package.

Planned, but not yet available features

Some FIs are dependent on used classes, methods or literals in your Vehicle App’s source code. For example the vehicle-model FI requires you to list required or provided datapoints. At the moment, these attributes need to be filled manually. There are ideas to auto-generate these attributes by analyzing the source code, but nothing is planned for that, yet.

Further information

- Tutorial: Quickstart

- Tutorial: Vehicle Model Creation

- Tutorial: Vehicle App Development

- Concept: Lifecycle Management

1.3.1 - Interfaces

1.3.1.1 - Vehicle Signal Interface

| Providing CLI package | Interface type-key |
|---|---|
| devenv-devcontainer-setup | vehicle-signal-interface |

The Vehicle Signal Interface (formerly known as the Vehicle Model interface type) creates an interface to a signal interface described by the VSS spec. This interface automatically generates a source code package equivalent to the contents of your VSS JSON upon devContainer creation.

If a Vehicle App requires a vehicle-signal-interface, it will act as a consumer of datapoints already available in the system. If, on the other hand, a Vehicle App provides a vehicle-signal-interface, it will act as a provider (formerly feeder in KUKSA terms) of the declared datapoints.

Furthermore, in the source code generated by this functional interface, a connection to KUKSA Databroker will be established via the configured Velocitas middleware. It uses the broker.proto interface provided by the KUKSA Databroker to connect via gRPC. A separate declaration of a grpc-interface for the databroker is NOT required.

More information: Vehicle Model Creation

Configuration structure

| Attribute | Type | Example value | Description |
|---|---|---|---|
| src | string | "https://github.com/COVESA/vehicle_signal_specification/releases/download/v3.0/vss_rel_3.0.json" | URI of the used COVESA Vehicle Signal Specification JSON export. URI may point to a local file or to a file provided by a server. |
| unit_src | array[string] | ["abs_path_unit_file_1", "abs_path_unit_file_2", "uri_unit_file_3"] | An array of URIs/absolute paths of the used COVESA Vehicle Signal Specification unit file(s) in YAML format. A URI may point to a local file or to a file provided by a server. If none is provided, a default one will be used (https://github.com/COVESA/vehicle_signal_specification/blob/v4.0/spec/units.yaml). |
| datapoints | object | | Object containing both required and provided datapoints. |
| datapoints.required | array | | Array of required datapoints. |
| datapoints.required.[].path | string | Vehicle.Speed | Path of the VSS datapoint. |
| datapoints.required.[].optional | boolean? | true, false | Is the datapoint optional, i.e. can the Vehicle App work without the datapoint being present in the system. Defaults to false. |
| datapoints.required.[].access | string | read, write | What kind of access to the datapoint is needed by the application. |
| datapoints.provided | array | | Array of provided datapoints. |
| datapoints.provided.[].path | string | Vehicle.Cabin.Seat.Row1.Pos1.Position | Path of the VSS datapoint. |

Example

{

"type": "vehicle-signal-interface",

"config": {

"src": "https://github.com/COVESA/vehicle_signal_specification/releases/download/v3.0/vss_rel_3.0.json",

"datapoints": {

"required": [

{

"path": "Vehicle.Speed",

"access": "read"

},

{

"path": "Vehicle.Body.Horn.IsActive",

"optional": true,

"access": "write"

}

],

"provided": [

{

"path": "Vehicle.Cabin.Seat.Row1.Pos1.Position"

}

]

}

}

}

Different VSS versions

The model generation is supported for VSS versions up to v4.0. Some paths changed from v3.0 to v4.0. For example, Vehicle.Cabin.Seat.Row1.Pos1.Position in v3.0 is Vehicle.Cabin.Seat.Row1.DriverSide.Position in v4.0. If you are using the mock provider, you need to take that into account when you specify your mock.py.
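When code has to work with both VSS versions, the renamed paths can be kept in one lookup table. The sketch below contains only the single rename mentioned above; the table and the function name are hypothetical, and further renames would have to be taken from the VSS release notes.

```python
# Known v3.0 -> v4.0 path renames (only the one mentioned in the text).
V3_TO_V4_PATHS = {
    "Vehicle.Cabin.Seat.Row1.Pos1.Position": "Vehicle.Cabin.Seat.Row1.DriverSide.Position",
}


def to_v4_path(path: str) -> str:
    """Translate a v3.0 path to v4.0; unchanged paths are returned as-is."""
    return V3_TO_V4_PATHS.get(path, path)
```

Paths without an entry in the table, such as Vehicle.Speed, pass through unchanged.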

1.3.1.2 - gRPC Service Interface

| Providing CLI package | Interface type-key |
|---|---|
| devenv-devcontainer-setup | grpc-interface |

Description

This interface type introduces a dependency to a gRPC service. It is used to generate either client stubs (in case your application requires the interface) or server stubs (in case your application provides the interface). The result of the generation is a language specific and package manager specific source code package, integrated with the Velocitas SDK core.

If a Vehicle App requires a grpc-interface - a client stub embedded into the Velocitas framework will be generated and added as a build-time dependency of your application. It enables you to access your service from your Vehicle App without any additional effort.

If a Vehicle App provides a grpc-interface - a server stub embedded into the Velocitas framework will be generated and added as a build-time dependency of your application. It enables you to quickly add the business logic of your application.

Configuration structure

| Attribute | Type | Example value | Description |
|---|---|---|---|
| src | string | "https://raw.githubusercontent.com/eclipse-kuksa/kuksa-incubation/0.4.0/seat_service/proto/sdv/edge/comfort/seats/v1/seats.proto" | URI of the used protobuf specification of the service. URI may point to a local file or to a file provided by a server. It is generally recommended that a stable proto file is used, i.e. one that is already released under a proper tag rather than an in-development proto file. |
| required.methods | array | | Array of service's methods that are accessed by the application. In addition to access control the methods attribute may be used to determine backward or forward compatibility i.e. if semantics of a service's interface did not change but methods were added or removed in a future version. |
| required.methods.[].name | string | "Move", "MoveComponent" | Name of the method that the application would like to access. |
| provided | object | {} | Reserved object indicating that the interface is provided. Might be filled with further configuration values. |

Execution

velocitas init

or

velocitas exec grpc-interface-support generate-sdk

Project configuration

{

"type": "grpc-interface",

"config": {

"src": "https://raw.githubusercontent.com/eclipse-kuksa/kuksa-incubation/0.4.0/seat_service/proto/sdv/edge/comfort/seats/v1/seats.proto",

"required": {

"methods": [

"Move", "MoveComponent"

]

},

"provided": { }

}

}

You need to specify devenv-devcontainer-setup >= v2.4.2 in your project configuration. Therefore, your .velocitas.json should look similar to this example:

{

"packages": {

"devenv-devcontainer-setup": "v2.4.2"

},

"components": [

{

"id": "grpc-interface-support"

}

]

}

To do that, you can run velocitas component add grpc-interface-support, provided that your package version is v2.4.2 or higher.
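The version requirement can be checked against the project configuration before adding the component. This is a sketch only: the function names are hypothetical, and it assumes package versions of the form vMAJOR.MINOR.PATCH as in the example above.

```python
def parse_version(tag: str) -> tuple:
    """Turn 'v2.4.2' into (2, 4, 2); assumes a plain vMAJOR.MINOR.PATCH tag."""
    return tuple(int(part) for part in tag.lstrip("v").split("."))


def supports_grpc_interface(project_config: dict) -> bool:
    """Check whether devenv-devcontainer-setup is at least v2.4.2."""
    version = project_config.get("packages", {}).get("devenv-devcontainer-setup")
    return version is not None and parse_version(version) >= (2, 4, 2)
```

Comparing tuples of integers avoids the pitfall of string comparison, where "v2.10.0" would sort before "v2.4.2".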

1.3.1.3 - Publish Subscribe

| Providing CLI package | Interface type-key |
|---|---|
| devenv-runtimes | pubsub |

Description

This interface type introduces a dependency to a publish and subscribe middleware. While this may change in the future due to new middlewares being adopted, at the moment this will always indicate a dependency to MQTT.

If a Vehicle App requires pubsub - this will influence the generated deployment specs to include a publish and subscribe broker (i.e. an MQTT broker).

If a Vehicle App provides pubsub - this will influence the generated deployment specs to include a publish and subscribe broker (i.e. an MQTT broker).

Configuration structure

| Attribute | Type | Example value | Description |
|---|---|---|---|
| reads | array[string] | [ "sampleapp/getSpeed" ] | Array of topics which are read by the application. |
| writes | array[string] | [ "sampleapp/currentSpeed", "sampleapp/getSpeed/response" ] | Array of topics which are written by the application. |

Example

{

"type": "pubsub",

"config": {

"reads": [ "sampleapp/getSpeed" ],

"writes": [ "sampleapp/currentSpeed", "sampleapp/getSpeed/response" ]

}

}

2 - Deployment Model

The Velocitas project uses a common deployment model. It uses OCI-compliant containers to increase the flexibility for the support of different programming languages and runtimes, which accelerates innovation and development. OCI-compliant containers also allow for a standardized yet flexible deployment process, which increases the ease of operation. Applications packaged as OCI-compliant containers are portable to different architectures as long as the desired platform supports such containers (e.g., through a container runtime for arm32, arm64 or amd64).

Guiding principles

The deployment model is guided by the following principles:

- Applications are provided as OCI-compliant container images.

- The container runtime offers a control plane and API to manage the container lifecycle.

The template projects provided come with a pre-configured developer toolchain that accelerates the development process. Through a high degree of automation, the developer toolchain makes it easy to create all artifacts needed to follow the Velocitas principles.

Testing your container during development

The Velocitas project provides developers with a repository template and devcontainer that contain everything needed to build a containerized version of your app locally and test it. Check out our tutorial, e.g., for the Python template, to learn more.

Automated container image builds

Velocitas uses GitHub workflows to automate the creation of your containerized application. A workflow is started with every increment of your application code that you push to your GitHub repository. The workflow creates a containerized version of your application and stores this container image in a registry. Further actions are carried out using this container (e.g., integration tests).

The workflows are set up to support multi-platform container creation and generate container images for amd64 and arm64 out of the box. This provides a great starting point for developers and lets you add additional support for further platforms easily.

Further information

2.1 - Build and Release Process

The Velocitas project provides a two-stage process for development, continuous integration, and release of a new version of a Vehicle App.

- Stage 1 - Build & Test: On every new push to the main branch or every update to a pull request, a GitHub workflow is automatically executed. It builds your application as a container (optionally for different platforms), runs automated tests and code quality checks, and stores all results as GitHub artifacts for future reference with a default retention period of 90 days. The workflow provides quick feedback during development and improves collaboration.

- Stage 2 - Release: Once the application is ready to be released in a new version, a dedicated release workflow is automatically executed as soon as you create a new release via GitHub. The release workflow bundles all relevant images and artifacts into one tagged set of files and pushes it to the GitHub Container Registry. In addition, all the information needed for quality assurance and documentation is published as release artifacts on GitHub. The image pushed to the GitHub Container Registry can afterwards be deployed on your target system using the Over-The-Air (OTA) update system of your choice.

The drawing below illustrates the different workflows, actions and artifacts that are automatically created for you. All workflows are intended as a sensible baseline and can be extended and adapted to the needs of your own project.

CI Workflow ( ci.yml )

The Continuous Integration (CI) workflow is triggered on every commit to the main branch or when creating/updating a pull request and contains a set of actions to achieve the following objectives:

- Building a container for the Vehicle App - actions create a containerized version of the Vehicle App.

- Scanning for vulnerabilities - actions scan your code and container for vulnerabilities and in case of findings the workflow will be marked as “failed”.

- Running integration tests - actions provision a runtime instance and deploy all required services as containers together with your containerized application to allow for automatically executing integration test cases. In case the test cases fail, the workflow will be marked as “failed”.

- Running unit tests & code coverage - actions run unit tests and calculate code coverage for your application. In case of errors or unsatisfactory code coverage, the workflow will be marked as “failed”.

- Storing scan & test results as GitHub action artifacts - actions store results from the previously mentioned actions for further reference or download as GitHub Actions artifacts.

Check out the example GitHub workflows in our template repository for Python.

Build multi-arch image Workflow ( build-multiarch-image.yml )

The Build multi-arch image workflow is triggered on every commit to the main branch and contains a set of actions to achieve the following objectives:

- Building a multi-arch container for the app - actions create a containerized version of the Vehicle App for multiple architectures (currently AMD64 and ARM64).

- Scanning for vulnerabilities - actions scan your code and container for vulnerabilities and in case of findings the workflow will be marked as “failed”.

- Storing container images to GitHub action artifacts - at the end of the workflow, the container image created is stored as a GitHub Actions artifact so that it can be referenced by the Release Workflow later.

Release Workflow ( release.yml )

The Release workflow is triggered as soon as the main branch is ready for release and the Vehicle App developer creates a new GitHub release. This can be done manually through the GitHub UI.

On creating a new release with a specific new version, GitHub creates a tag and automatically runs the Release workflow defined in .github/workflows/release.yml, provided that the CI workflow has run successfully for the current commit on the main branch.

The set of actions included in the Release workflow covers the following objectives:

- Generating and publishing QA information - actions load the QA information from GitHub artifacts stored for the same commit reference and verify it. Additionally, release documentation is generated and added to the GitHub release. If there is no information available for the current commit, the release workflow will fail.

- Publish as GitHub pages - all information from the release together with the project documentation is built as a static page using Hugo. The result is pushed to a separate branch and can be published as a GitHub page in your repository.

- Pull & label container image - actions pull the Vehicle App container image based on the current commit hash from the GitHub artifacts and label it with the specified tag version. If the image cannot be found, the workflow will fail.

- Push container image to ghcr.io - finally the labeled container image is pushed to the GitHub container registry and can be used as a deployment source.

GitHub Actions artifacts

GitHub Actions artifacts are used for storing data, which is generated by the CI workflow and referenced by the Release workflow. This saves time during workflow runs because we don’t have to create artifacts multiple times.

GitHub Actions artifacts always have a retention period, which is 90 days by default. This may be configured differently in the specific GitHub organization. After this period, the QA info gets purged automatically. In this case, a re-run of the CI workflow would be required to regenerate all QA info needed for creating a release.
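Whether the QA info for a commit is still available can be estimated from the date of the CI run. The sketch below assumes the default retention period of 90 days mentioned above; the function name is hypothetical and the exact purge time on GitHub may differ.

```python
from datetime import date, timedelta


def artifacts_expired(created: date, today: date, retention_days: int = 90) -> bool:
    """Return True once the retention period after creation has passed."""
    return today > created + timedelta(days=retention_days)
```

If this returns True for the commit to be released, the CI workflow has to be re-run before creating the release.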

Container Registry

The GitHub container registry is used for storing container images pushed by the Release workflow. These images can easily be used for a deployment and don’t have a retention period.

Since the registry does not have an automatic cleanup, it keeps container images as long as they are not deleted. It is recommended that you automate the removal of older images to limit storage size and costs.

Versioning

Vehicle App image versions are set to the Git tag name during release. Though any versioning scheme can be adopted, the usage of semantic versions is recommended.

If the tag name contains a semantic version, the leading v will be trimmed.

Example: A tag name of v1.0.0 will lead to version 1.0.0 of the Vehicle App container.
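The trimming rule can be sketched as follows. The regular expression is an assumption about what counts as a semantic version in a tag name; the actual release workflow may use a different check.

```python
import re

# 'v' followed by MAJOR.MINOR.PATCH and an optional pre-release/build suffix.
_SEMVER_TAG = re.compile(r"^v(\d+\.\d+\.\d+(?:[-+].*)?)$")


def image_version(tag_name: str) -> str:
    """Derive the container image version from a Git tag name."""
    match = _SEMVER_TAG.match(tag_name)
    return match.group(1) if match else tag_name
```

Tag names that do not carry a semantic version, for example a tag called vehicle-demo, are used unchanged.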

Maintaining multiple versions

If there is a need to maintain multiple versions of a Vehicle App, e.g., to hotfix the production version while working on a new version at the same time or to support multiple versions in production, create and use release branches.

The release process would be the same as described in the overview, except that a release branch (e.g., release/v1.0) is created before the release step and the GitHub release is based on the release branch rather than the main branch. For hotfixes, release branches may be created retroactively from the release tag, if needed.

Further information

- Tutorial: How to write integration tests

3 - Lifecycle Management

Introduction

Once a repository has been created from one of our Vehicle App templates, the only way to receive updates in your derived repository is to pull changes manually, which is tedious and error-prone. This is where our Lifecycle Management comes to the rescue!

All of our main components of the development environment, like

- tools

- runtimes

- devcontainer configuration and setup

- build systems

- CI workflows

are (or will be) provided as versioned packages which can be updated individually, if required.

The driver for this is our Velocitas CLI, which is the package manager for Vehicle App repositories.

Overview

Here we can see how the MyVehicleApp repository references package repositories by Velocitas, customer-specific packages, and some packages from a totally different development platform (Gitee).

If you want to learn more about how to reference and use packages, check the sections for project configuration and packages.

Lifecycle Management flow

3.1 - Project Configuration

Every Vehicle App repo comes with a .velocitas.json, which is the project configuration of your app. It holds references to the packages and their respective versions as well as the components you are using in your project.

Here is an example of this configuration:

{
  "packages": {
    "devenv-runtimes": "v3.1.0",
    "devenv-devcontainer-setup": "v2.1.0"
  },
  "components": [
    "runtime-local",
    "devcontainer-setup",
    "vehicle-signal-interface",
    "sdk-installer",
    "grpc-interface-support"
  ],
  "variables": {
    "language": "python",
    "repoType": "app",
    "appManifestPath": "app/AppManifest.json",
    "githubRepoId": "eclipse-velocitas/vehicle-app-python-template",
    "generatedModelPath": "./gen/vehicle_model"
  },
  "cliVersion": "v0.9.0"
}

More detailed information about the project configuration and the fields of the .velocitas.json can be found here.
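
Because the project configuration is plain JSON, it is easy to inspect from your own tooling. The following minimal sketch lists the referenced packages with their versions. The helper names are hypothetical. Only the file name and the `packages` field are taken from the example configuration above.

```python
import json
from pathlib import Path


def load_project_config(path=".velocitas.json"):
    """Read the project configuration from the given path."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def describe_packages(config):
    """Return 'name:version' strings for all packages referenced by the project."""
    return [f"{name}:{version}" for name, version in config.get("packages", {}).items()]


if __name__ == "__main__":
    # Expects a .velocitas.json in the current working directory.
    for entry in describe_packages(load_project_config()):
        print(entry)
```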

Next steps

- Lifecycle Management: Usage of Packages

- Lifecycle Management: Development of Packages

3.2 - Velocitas CLI

Background

The Velocitas CLI acts as a project manager that supports the lifecycle of a Vehicle App.

Commands

You can find all information about available commands here.

CLI Flow examples

velocitas create

Create a new Velocitas Vehicle App project.

Note

velocitas create needs to be executed inside our generic vehicle-app-template (inside the devcontainer). For now, this is where the so-called package-index.json is located, the central place that defines our extension and core packages with their respective exposed interfaces.

vscode ➜ /workspaces/vehicle-app-template (main) $ velocitas create

Interactive project creation started

> What is the name of your project? MyApp

> Which programming language would you like to use for your project? (Use arrow keys)

❯ python

cpp

> Would you like to use a provided example? No

> Which functional interfaces does your application have? (Press <space> to select, <a> to toggle all, <i> to invert selection, and <enter> to proceed)

❯◉ Vehicle Signal Interface based on VSS and KUKSA Databroker

◯ gRPC service contract based on a proto interface description

...

Config 'src' for interface 'vehicle-signal-interface': URI or path to VSS json (Leave empty for default: v3.0)

...

velocitas init

Downloads the packages configured in your .velocitas.json to VELOCITAS_HOME.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas init

Initializing Velocitas packages ...

... Downloading package: 'devenv-runtimes:v1.0.1'

... Downloading package: 'devenv-github-workflows:v2.0.4'

... Downloading package: 'devenv-github-templates:v1.0.1'

... Downloading package: 'devenv-devcontainer-setup:v1.1.7'

Running post init hook for model-generator

Running 'install-deps'

...

Single Package Init

Single packages can also be initialized or re-initialized using the package parameter -p / --package and the specifier parameter -s / --specifier. The specifier can be either a Git tag or a Git hash. If the specifier is omitted, the version defined in .velocitas.json is used or, if the package is not listed there, the latest version of the specified package. After initialization, the package and its resolved version are written to .velocitas.json. If the package already exists in .velocitas.json but the versions differ, the entry is automatically updated to the specified version. If no components from the specified package have been added to .velocitas.json yet, all components of this package are automatically written to it.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas init -p devenv-runtimes -s v3.0.0

Initializing Velocitas packages ...

... Package 'devenv-runtimes:v3.0.0' added to .velocitas.json

... Downloading package: 'devenv-runtimes:v3.0.0'

... > Running post init hook for ...

...
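
The resolution rules for a single package init can be summarized in a short sketch. This models the behavior described above and is not the CLI's actual implementation. The function name and its parameters (`latest_version`, `package_components`) are hypothetical stand-ins for information the CLI would look up itself.

```python
import copy


def resolve_single_package(config, package, specifier=None,
                           latest_version=None, package_components=()):
    """Return an updated copy of the project configuration for one package."""
    updated = copy.deepcopy(config)
    packages = updated.setdefault("packages", {})
    components = updated.setdefault("components", [])

    # An explicit specifier wins, then the configured version, then the latest version.
    version = specifier or packages.get(package) or latest_version
    if version is None:
        raise ValueError(f"no version could be resolved for package '{package}'")
    packages[package] = version

    # If none of the package's components are referenced yet, add all of them.
    if not any(component in components for component in package_components):
        components.extend(package_components)
    return updated
```

For example, calling it with the package `devenv-runtimes` and the specifier `v3.0.0` on a configuration that references `v3.1.0` yields a configuration that references `v3.0.0`, mirroring the console output above.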

velocitas sync

If any package provides files, they will be synchronized into your repository.

Note

This will overwrite any changes you have made to the files manually! Affected files are prefixed with an auto-generated notice.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas sync

Syncing Velocitas components!

... syncing 'devenv-github-workflows'

... syncing 'devenv-github-templates'

... syncing 'devenv-devcontainer-setup'

velocitas upgrade

Updates Velocitas components.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas upgrade --dry-run [--ignore-bounds]

Checking .velocitas.json for updates!

... devenv-devcontainer-setup:vx.x.x → up to date!

... devenv-runtimes:vx.x.x → vx.x.x

... devenv-github-templates:vx.x.x → up to date!

... devenv-github-workflows:vx.x.x → up to date!

velocitas package

Prints information about packages.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas package devenv-devcontainer-setup

devenv-devcontainer-setup

version: v1.1.7

components:

- id: devcontainer-setup

type: setup

variables:

language

type: string

description: The programming language of the project. Either 'python' or 'cpp'

required: true

repoType

type: string

description: The type of the repository: 'app' or 'sdk'

required: true

appManifestPath

type: string

description: Path of the AppManifest file, relative to the .velocitas.json

required: true

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas package devenv-devcontainer-setup -p

/home/vscode/.velocitas/packages/devenv-devcontainer-setup/v1.1.7

velocitas exec

Executes a script contained in one of your installed components.

vscode ➜ /workspaces/vehicle-app-python-template (main) $ velocitas exec runtime-local run-vehicledatabroker

#######################################################

### Running Databroker ###

#######################################################

...

More detailed usage can be found at the Velocitas CLI README.

Additional Information

Cache Usage

The Velocitas CLI supports caching data for a Vehicle App project.

Any script or program of a component can read from and write to this cache.

More detailed information about the Project Cache can be found here.

Built-In Variables

The Velocitas CLI also creates default environment variables which are available to every script/program.

| variable | description |
|---|---|
| VELOCITAS_WORKSPACE_DIR | Current working directory of the Vehicle App |
| VELOCITAS_CACHE_DIR | Vehicle App project-specific cache directory, e.g., ~/.velocitas/cache/<generatedMd5Hash> |
| VELOCITAS_CACHE_DATA | JSON string of ~/.velocitas/cache/<generatedMd5Hash>/cache.json |
| VELOCITAS_APP_MANIFEST | JSON string of the Vehicle App AppManifest |

More detailed information about Built-In Variables can be found here.
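
A script that is run through the CLI could pick up the built-in variables as in the following minimal sketch. The variable names are the ones listed in the table above. The function name, the returned dictionary layout, and the fallback to empty JSON objects when a variable is missing are assumptions.

```python
import json
import os


def read_velocitas_context(environ=os.environ):
    """Collect the built-in variables, decoding the JSON-valued ones."""
    return {
        "workspace_dir": environ.get("VELOCITAS_WORKSPACE_DIR"),
        "cache_dir": environ.get("VELOCITAS_CACHE_DIR"),
        # Both variables hold JSON strings; fall back to an empty object if unset.
        "cache_data": json.loads(environ.get("VELOCITAS_CACHE_DATA") or "{}"),
        "app_manifest": json.loads(environ.get("VELOCITAS_APP_MANIFEST") or "{}"),
    }


if __name__ == "__main__":
    context = read_velocitas_context()
    print("Workspace:", context["workspace_dir"])
    print("Cached keys:", sorted(context["cache_data"]))
```

Passing the environment as a parameter keeps the function easy to test outside of a CLI invocation.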

Next steps

- Lifecycle Management: Troubleshooting

3.3 - Phases

3.3.1 - Create

To be filled.

Template based creation flow

Bootstrapping creation flow

Resulting Velocitas CLI and Velocitas Package changes

- A velocitas create command shall be introduced. It will guide the developer through the project creation process and allow adding APIs and services at creation time, which will reference the correct Velocitas CLI packages (either provided by Velocitas or by a 3rd party).

- In addition to an interactive mode, where create is invoked without arguments, there shall be a CLI mode in which all inputs can be passed as arguments.

- Packages need to be available in a central registry (i.e., a new Git repository); otherwise, step 3 (depicted below) is not possible.

- Packages need to expose which dependency types they are providing in their manifest. For each dependency type, a human-readable name shall be exposed.

Interaction mockup

> velocitas create

... Creating a new Velocitas project!

> What is the name of your project?

MyApp

> 1. Which programming language would you like to use for your project?

[ ] Python

[x] C++

> 2. Which integrations would you like to use? (multiple selections possible)

[x] Github

[x] Gitlab

[ ] Gitee

> 3. Which API dependencies does your project have?

[x] gRPC service

[ ] uProtocol service

> 4. Add an API dependency (y/n)?

y

> 5. What type of dependency?

[x] gRPC-IF

> 6. URI of the .proto file?

https://some-url/if.proto

> 7. Add an(other) API dependency (y/n)?

n

... Project created!

Arguments mockup:

$ velocitas create \

--name MyApp \

--lang cpp \

--package grpc-service-support \

--require grpc-interface:https://some-url/if.proto

> Project created!
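
To illustrate the shape of the argument mode, the mockup's flags can be modeled with Python's argparse. This is only a sketch of the proposed interface and not the Velocitas CLI implementation. The helper names, the choice of required flags, and the `TYPE:URI` split of `--require` are assumptions derived from the mockup above.

```python
import argparse


def build_create_parser():
    """Build a parser that accepts the flags shown in the arguments mockup."""
    parser = argparse.ArgumentParser(prog="velocitas create")
    parser.add_argument("--name", required=True, help="Name of the project")
    parser.add_argument("--lang", required=True, choices=["python", "cpp"],
                        help="Programming language of the project")
    parser.add_argument("--package", action="append", default=[],
                        help="Package to reference (may be repeated)")
    parser.add_argument("--require", action="append", default=[], metavar="TYPE:URI",
                        help="API dependency as <type>:<uri> (may be repeated)")
    return parser


def parse_requirement(value):
    """Split 'grpc-interface:https://...' at the first colon into (type, uri)."""
    dependency_type, _, uri = value.partition(":")
    return dependency_type, uri


if __name__ == "__main__":
    args = build_create_parser().parse_args()
    print(args.name, args.lang, args.package,
          [parse_requirement(item) for item in args.require])
```

Splitting at the first colon only keeps the scheme separator inside the URI intact.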

3.4 - Packages

3.4.1 - Usage

Overview

After you have set up the .velocitas.json for your project configuration, using packages is pretty straightforward.

Currently, the packages provided by the Velocitas team are the following:

| name | description |
|---|---|
| devenv-runtimes | Contains scripts and configuration for Local and Kanto Runtime Services |
| devenv-devcontainer-setup | Basic configuration for the devcontainer, like proxy configuration, post-create scripts, and entry points for the lifecycle management |
| devenv-github-workflows | Contains GitHub workflow files used by Velocitas repositories |
| devenv-github-templates | Contains GitHub templates used by Velocitas repositories |